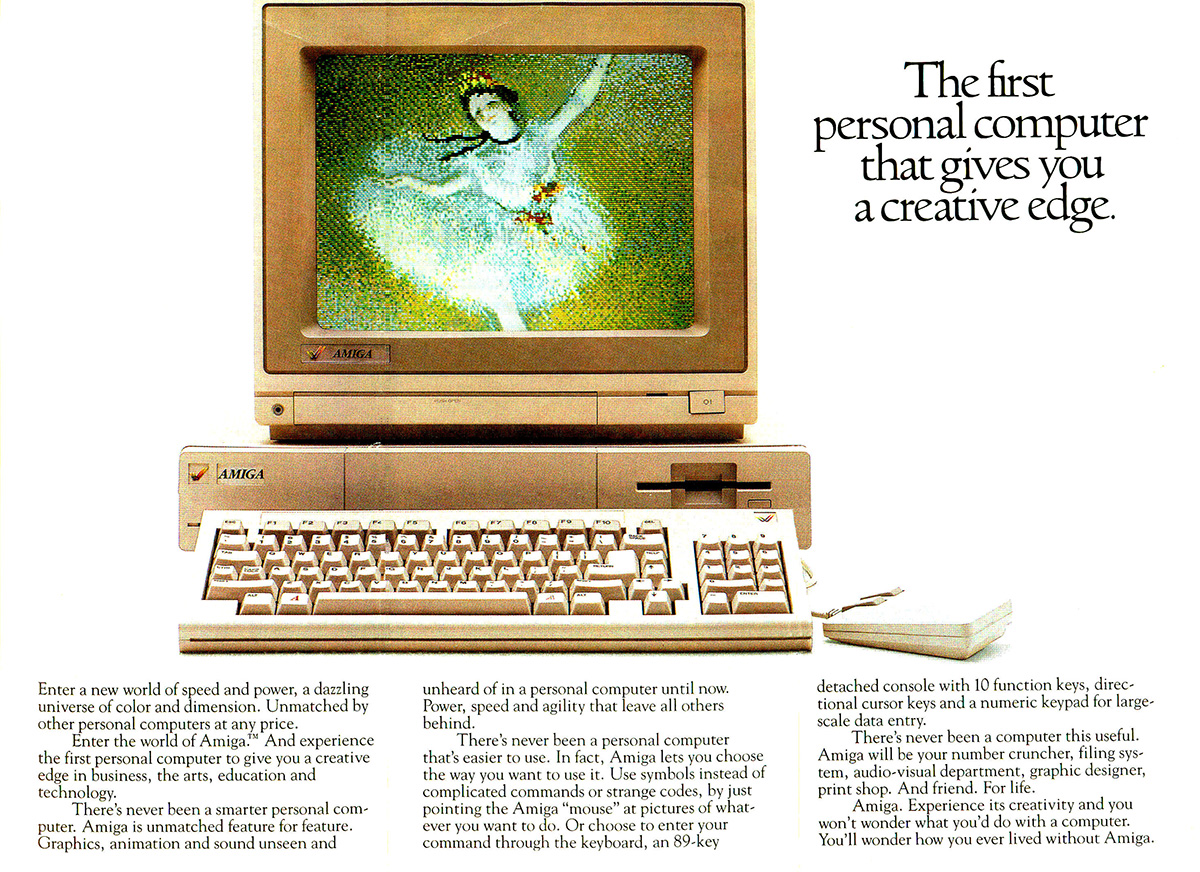

The Amiga represented an evolutionary leap forward in computing’s creative potential.

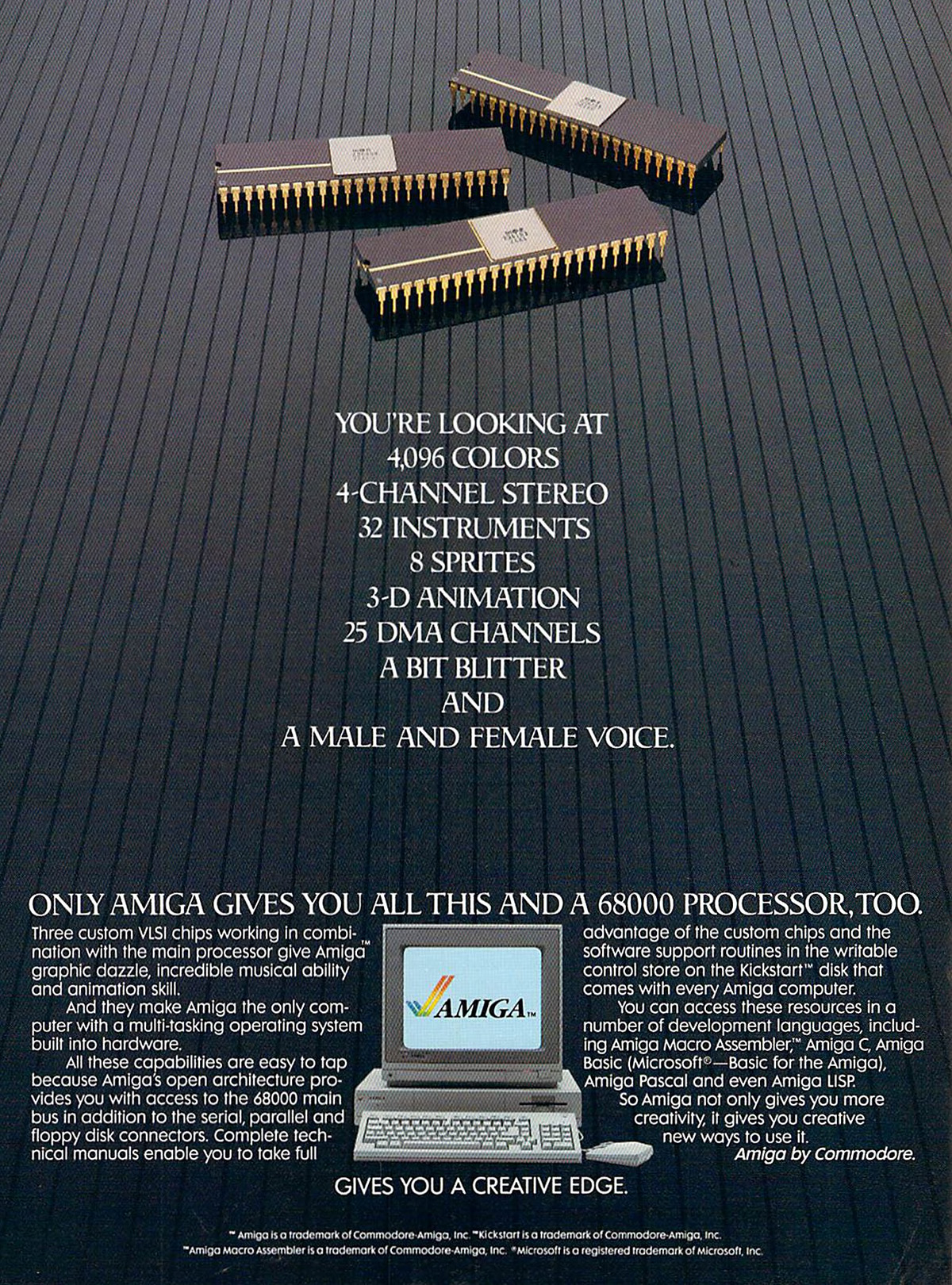

While colour computer graphics were not a new invention, the Amiga provided a video mode capable of displaying 4096 colours – unprecedented in a consumer computer.

This allowed for stunning photorealistic images.

Not only a feast for the eyes, but the ears too!

The Amiga also featured four digital sound channels that could play back samples directly from the computer’s memory, reproducing acoustic instruments and vocal tracks, and producing surprisingly good musical arrangements.

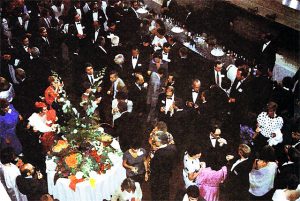

On July 23rd, 1985 the Amiga premiered at a lavish party at the Lincoln Center in New York City.

The Amiga debuted to a black-tie audience of press, software and hardware developers, Commodore shareholders, investors and celebrities.

The Amiga was presented as a bridge between technology and creativity, as a new kind of computer that could fill any need, be that artistic, or scientific, or business-related. A small company could get a single Amiga that would take care of all their computing tasks, or a large corporation could buy a fleet of them, each designated toward a particular purpose, but capable of taking over for any of the others should the need arise.

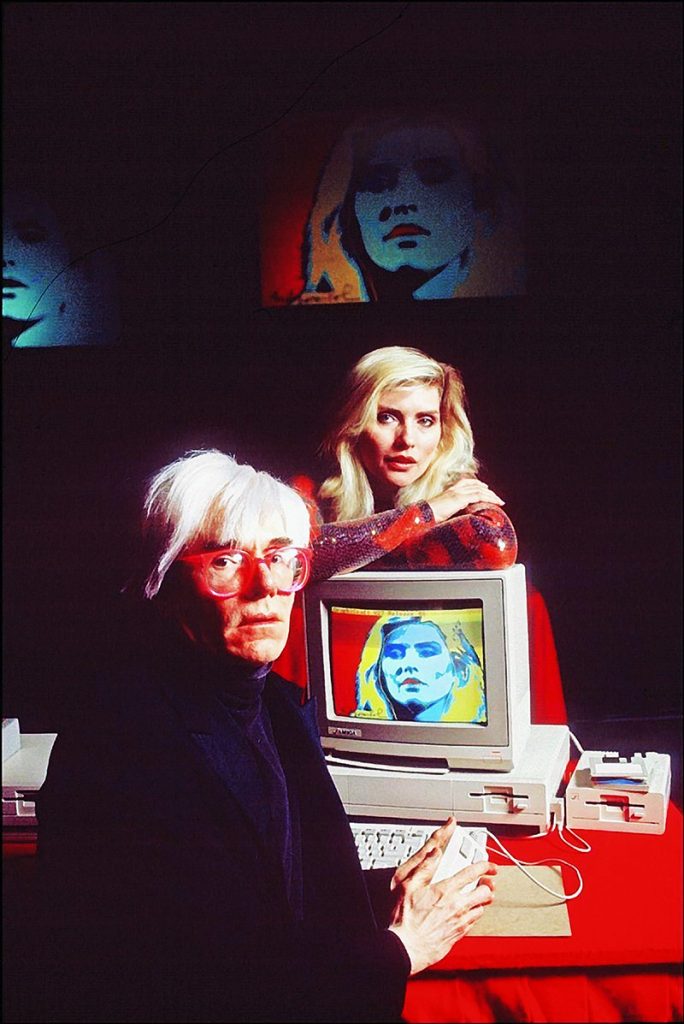

But while the Amiga could be argued to be a Jack-of-all-computing-trades, the evening in New York City was certainly more sharply focused on its creative abilities, as demonstrated on stage by pop-art sensation Andy Warhol, digitally painting musician Debbie Harry (of Blondie).

Who do you get when you want to impress the 1980s art world? Why, Andy Warhol, of course!

The Amiga also showed off its musical skills, accompanying saxophonist Tom Scott in a live performance.

Jon Shirley, then-President of Microsoft, was on hand to assure attendees his company would be supporting the Amiga by developing a new version of BASIC – although the 16-bit era would largely kill BASIC as a programming language, with interpreters generally no longer built in and BASIC compilers uncommon.

But the star of the show was the Amiga, displaying dazzling digital works of art and animation throughout the evening on three overhead screens. The Amiga even ‘spoke’ to the audience via speech synthesis, drawing applause.

Developers showed prototypes of their Amiga products while much discussion took place regarding the Amiga’s future: what new applications would be discovered for it? How would it change the computing landscape going forward? What would its fortunes ultimately be?

Of course, we now know the answer to those questions, looking backward from our perch in the present, but that evening, as with many technology launches in the 1980s, the room was abuzz with speculation, hope and excitement for a future it was expected would be full of wonder and amazement.

And it was.

Jay Miner’s masterpiece was exactly that.

To understand just how much of a masterpiece it was, you need to have a sense of the personal computing market of the day. Most computers still being sold in 1985 were 8-bit and had very simple graphics, with modes of fewer than 200 horizontal pixels and at most 16 colours (the Atari 400/800 series had additional ‘shades’ but there were still only 16 base colours). Many people still owned computers capable of much less.

Even the 16-bit Macintosh only had monochrome (in the truest sense – a pixel could only be either black or white) graphics at 512×342 pixels, which was great for print publishing, but if you wanted to express even a single shade of grey you needed to use half-toning, which decreased your effective resolution to half of that. And so the idea of a consumer machine with 4096 colours to choose from and resolutions of up to 640×512 pixels that cost less than half as much as a Macintosh was shocking, to say the least. Add on top of that four-channel digital sound (the Macintosh had one channel, and most contemporary 8-bit computers had analogue synthesisers of varying complexity) and you had a computer that seemed to most users like a technological fantasy that popped out of a wormhole from the future, not something they could just go out and buy from their local computer shop next week.
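To make the half-toning trade-off concrete, here is a minimal Python sketch. It assumes a simple 2×2 pattern, and the names `halftone_2x2`, `PATTERN_ORDER` and `grey_grid_size` are purely illustrative rather than taken from any Macintosh software. Each grey level from 0 to 4 is expanded into a 2×2 cell of black-or-white pixels, which is why the usable resolution halves in each direction.

```python
# Order in which the four pixels of a 2x2 cell are switched on as the
# grey level rises (an arbitrary, illustrative choice of pattern).
PATTERN_ORDER = [(0, 0), (1, 1), (0, 1), (1, 0)]


def halftone_2x2(grey):
    """Expand a grid of grey levels (0-4) into 1-bit pixels (0=black, 1=white)."""
    height, width = len(grey), len(grey[0])
    out = [[0] * (width * 2) for _ in range(height * 2)]
    for y in range(height):
        for x in range(width):
            # Turn on as many pixels in this cell as the grey level asks for.
            for dy, dx in PATTERN_ORDER[:grey[y][x]]:
                out[y * 2 + dy][x * 2 + dx] = 1
    return out


def grey_grid_size(mono_width, mono_height):
    """Size of the grey 'pixel' grid a 1-bit display can show with 2x2 cells."""
    return mono_width // 2, mono_height // 2
```

Under these assumptions, a 512×342 monochrome screen carries only a 256×171 grid of five-level grey cells.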

So how did Jay Miner, the Wizard of Amiga, manage this modern miracle? The solution was custom-designed chips, which were much cheaper to manufacture than circuits made up of off-the-shelf components. Miner was not new to custom-designed chips – in the mid-1970s he led the team that combined a number of the components in the design of the Atari 2600 into a single chip known as the Television Interface Adaptor, or TIA. The TIA aimed to remove the need for video RAM by rendering game objects directly to the television screen, one line at a time. This increased the complexity of programmed games, but greatly reduced the cost of the Atari 2600, placing those games in the hands of many more gamers.

Miner moved on to leading teams that designed two custom video chips for Atari’s subsequent home computer systems, known as the ANTIC or Alphanumeric Television Interface Controller chip, and the CTIA or Colour Television Interface Adaptor. The ANTIC chip could read directly from memory (without needing the CPU) and render multiple sizes of text to the screen as well as arbitrary graphical data, and provided CPU ‘interrupts’ that allowed software to execute drawing routines during the Vertical Blank Interval (VBI) at the end of a CRT’s raster scan while its electron gun was inactive.

The ANTIC then sent the video information to the CTIA, which was based on the Atari 2600’s TIA chip and responsible for generating and colouring the video signal. Like the TIA, the CTIA could also generate game objects on its own, known as Player/Missile Graphics, but also like the TIA it had no memory, and so the ANTIC had to feed data to the CTIA line-by-line, just as Atari 2600 programmers had been forced to do with the TIA. But combined, the two chips provided powerful graphics capabilities (by 1979 standards) and helped make the Atari 800 (in particular) and later models successful.

Miner soon began to lobby Atari to start development of a 16-bit gaming console to succeed the Atari 2600, but Atari management felt a console version of the Atari 800 (to be known as the Atari 5200) would be sufficient for the foreseeable future. With nothing of consequence to achieve, Miner left, taking a number of other engineers with him and forming his own company, called Hi-Toro. This was a mostly amicable parting – Atari later provided half-a-million dollars in capital to keep Hi-Toro going, in exchange for first rights to whatever resulting chipset Hi-Toro created.

Hi-Toro then began work on what would become the Amiga.

The ensuing corporate shenanigans having already been adequately described in the ‘fairy tale’ that preceded this article, we won’t waste time revisiting them, and will instead focus on the technical aspects of Miner’s eventual creation.

The heart of the Amiga was and had always been the Motorola 68000 processor – it was the catalyst for Miner to want to develop a 16-bit system in the first place. First released in 1979, it was a nerd’s dream – although 16-bit externally, it was 32-bit internally, which meant it could do incredibly complex operations at a much faster speed than contemporary 8-bit processors. However, that performance came at a price that was too high at that time for a mass-produced consumer product based on it to be practical – which is why Atari turned Miner’s proposal down.

But Miner realised that by the time all of the associated hardware and operating system software had been developed the price of the 68000 was likely to have come down within the required price range for a consumer product to be profitable, and he was willing to bet his own future on it. During that time, his company would need to develop three chips: a memory controller, dubbed Agnus, a graphics processor known as Denise and an audio chip called Paula. The functionality spread between them was similar to the functionality shared by the ANTIC, CTIA and ‘POKEY’ chips used in the Atari 8-bit computer line, the latter of which provided sound output, keyboard and game controller input.

Of the three Amiga women, Agnus was the boss of the operation. All other chips, even the 68000, had to access the Amiga’s memory through Agnus. This meant they couldn’t attempt to write to memory at the same time – a problem known as write contention. Instead, they had to request accesses via Agnus, who was able to prioritise the requests and keep the overall system running smoothly.

But Agnus also had other roles pivotal to the Amiga’s graphical capabilities. Firstly, she had a ‘blitter’, short for ‘BLock Image TransferrER’. Agnus’s blitter could rapidly copy large blocks of video memory without needing the CPU, such as when the user moved windows in the Graphical User Interface, or GUI. It could also fill areas of the screen with colour and draw lines, either solid or using a repeating pattern.
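The core idea of a block transfer can be sketched in a few lines of Python. This is a deliberately simplified model: the real blitter worked on words of bitplane data and could combine several sources with logic operations, whereas the hypothetical `blit_copy` below just copies a rectangle of values between two lists of rows, with no clipping at the edges.

```python
def blit_copy(src, src_x, src_y, dst, dst_x, dst_y, width, height):
    """Copy a width x height rectangle from src to dst (both lists of rows)."""
    # Snapshot the source rectangle first so that overlapping copies within
    # the same buffer (dragging a window, say) do not overwrite pixels
    # that have yet to be read.
    block = [row[src_x:src_x + width] for row in src[src_y:src_y + height]]
    for dy, row in enumerate(block):
        dst[dst_y + dy][dst_x:dst_x + width] = row
```

Passing the same buffer as both `src` and `dst` imitates moving a window around a single screen.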

Secondly, Agnus had the Copper. Short for ‘co-processor’, the Copper could execute a series of instructions in sync with the video hardware. It could be used to switch between video resolutions mid-frame, allowing programs to, for example, use a higher resolution for a menu bar and a lower, more colourful resolution for the remainder of the screen. Or two programs could be shown on screen at once. Or the colour palette could be changed on each scanline, allowing for a different 16 (or 32) colours on each line. Or it could create the rainbow ‘raster bars’ often used in games.
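A rough way to picture the Copper is as a tiny program of ‘wait for this scanline, then write this value’ steps. The Python sketch below models only that idea, and only for a single background colour register; the function name `run_copper_list` and the `(wait_line, colour)` tuple layout are assumptions made for illustration, not the real instruction encoding.

```python
def run_copper_list(copper_list, num_lines, initial_colour=0x000):
    """Return the background colour in effect on each scanline.

    copper_list is a sequence of (wait_line, colour) pairs sorted by
    wait_line: wait until the beam reaches wait_line, then write colour
    (a 12-bit 0xRGB value) to the background colour register.
    """
    colours = []
    current = initial_colour
    pending = list(copper_list)
    for line in range(num_lines):
        # Execute every instruction whose wait position has been reached.
        while pending and pending[0][0] <= line:
            current = pending.pop(0)[1]
        colours.append(current)
    return colours
```

Feeding it a list that changes the colour every few lines gives the stacked bands of a simple raster bar.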

Finally, Agnus could synchronise the video timing to an external signal, which was important in the days of analogue television as this was the only way you could ‘mix’ two video signals on the fly. This was known as ‘genlocking’. The Amiga could also output video without a background signal, which meant it was easy to overlay Amiga graphics on top of video, and much more cheaply than conventional alternatives, which made it popular with television stations and studios.

Denise, meanwhile, had the job of rendering the graphics information she received from Agnus into an NTSC or PAL video signal, typically either 320 or 640 pixels per horizontal line, using a palette of 2-32 colours. This process is pretty straightforward, but Denise had a few tricks of her own. Firstly, she could display more pixels per line – known as ‘overscan’ – reaching the edges of a CRT display. Secondly, she could add an extra bit to each 5-bit 32-colour pixel which halved the brightness of the pixel if it was on – known as Extra Half-Brite (EHB) mode – effectively doubling the number of available colours to 64.
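The Extra Half-Brite trick is easy to model. The sketch below assumes colours are stored as 12-bit `0xRGB` values with four bits per component, and builds the 64-entry palette by appending a half-brightness copy of each of the 32 base colours; `ehb_palette` is an illustrative name, not a system call.

```python
def ehb_palette(base_palette):
    """Extend 32 12-bit 0xRGB colours to 64 by appending half-bright copies."""
    if len(base_palette) != 32:
        raise ValueError("EHB expects exactly 32 base colours")
    # Shifting right by one halves every 4-bit component; the 0x777 mask
    # stops one component's low bit from leaking into its neighbour.
    half_bright = [(colour >> 1) & 0x777 for colour in base_palette]
    return list(base_palette) + half_bright
```

A pixel whose sixth bit is set simply indexes into the second half of the returned list.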

Thirdly, she could render graphics using ‘hold-and-modify’ or HAM mode. Rather than selecting each pixel from a palette of 16 colours, a six-bit pixel value could instead tell Denise to keep the colour of the previous pixel but modify its red, green or blue component. Technically you could display all 4096 available colours, but in practice this required ensuring the 16 base colours you chose were appropriate for the image you were displaying. However, as the Copper was capable of changing the palette between scanlines, you could get around this limitation (a trick known as ‘sliced HAM’ or S-HAM mode, although this is less a mode and more of a technique).
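Hold-and-modify is easier to follow in code than in prose. The Python sketch below decodes one scanline of six-bit HAM pixels, assuming the commonly documented layout in which the top two bits pick the action (00 load a base colour, 01 modify blue, 10 modify red, 11 modify green) and the bottom four bits carry the value. The function name `decode_ham6_line` and the choice to start each line from a supplied background colour are assumptions made for this example.

```python
def decode_ham6_line(pixels, palette, background=0x000):
    """Decode one scanline of 6-bit HAM pixels into 12-bit 0xRGB colours."""
    out = []
    # The 'held' colour at the start of the line (assumed: the background).
    r, g, b = (background >> 8) & 0xF, (background >> 4) & 0xF, background & 0xF
    for pixel in pixels:
        control, data = (pixel >> 4) & 0x3, pixel & 0xF
        if control == 0b00:      # load one of the 16 base colours
            colour = palette[data]
            r, g, b = (colour >> 8) & 0xF, (colour >> 4) & 0xF, colour & 0xF
        elif control == 0b01:    # hold red and green, modify blue
            b = data
        elif control == 0b10:    # hold green and blue, modify red
            r = data
        else:                    # hold red and blue, modify green
            g = data
        out.append((r << 8) | (g << 4) | b)
    return out
```

Because only one component can change per pixel, reaching an unrelated colour can take up to three pixels unless a suitable base colour is available, which is why the choice of the 16 base colours matters so much.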

Fourthly, Denise could overlay one 8-colour screen on top of another; fifthly she could display ‘interlaced’ video that allowed for greater vertical resolutions than 200 pixels (although it didn’t look awesome); sixthly she could manage up to 8 independent sprites and finally, Denise handled mouse and joystick input. Whew! Busy woman!

The Amiga’s graphics were state-of-the-art. Its palette of 4096 colours was eight times larger than that of the Atari ST, and sixty-four times EGA’s – and in its special ‘HAM’ mode, it could display all of those 4096 colours at once! (The ST and EGA PC could only display 16 at a time.) The Amiga was even capable of 16 colours in 640×400 – a resolution that on the Atari ST was monochrome in the true sense (only black or white). You could even ‘overscan’ all the way to 704×576 in PAL mode!
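Those palette ratios fall straight out of the number of bits each machine devotes to the red, green and blue components. The short Python check below assumes four bits per component for the Amiga, three for the Atari ST and two for EGA; the helper name `palette_size` is just for illustration.

```python
def palette_size(bits_per_channel):
    """Number of distinct colours with the given bits for each of R, G and B."""
    return (2 ** bits_per_channel) ** 3


AMIGA = palette_size(4)     # 16 levels per component
ATARI_ST = palette_size(3)  # 8 levels per component
EGA = palette_size(2)       # 4 levels per component
```

Dividing `AMIGA` by `ATARI_ST` and by `EGA` reproduces the eight-fold and sixty-four-fold figures.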

Last but not least, there was Paula. Paula was a true DJ: she spun disks, talked a lot and made noise. On the first front, Paula was able to access connected disk drives much more directly than most contemporary floppy disk controllers – an entire track could be read or written at once, rather than sector-by-sector as on other computing platforms, which reduced wasted space and increased the amount of data that fit on a normally 720K-capacity disk to 830K or more. Because of the lack of abstraction between the controller and the disk, Paula could also read other disk formats, including those used by the IBM PC or Apple II.
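The capacity gain can be checked with a little arithmetic. The sketch below assumes a double-density 3.5-inch disk with 80 cylinders, two sides and 512-byte sectors, with 9 sectors per track in the PC-style format and 11 in the standard Amiga format; the constant and function names are illustrative.

```python
BYTES_PER_SECTOR = 512
CYLINDERS = 80
SIDES = 2


def capacity_kib(sectors_per_track):
    """Raw formatted capacity in KiB for a given number of sectors per track."""
    total_bytes = CYLINDERS * SIDES * sectors_per_track * BYTES_PER_SECTOR
    return total_bytes // 1024


PC_STYLE = capacity_kib(9)      # sector-by-sector layout with gaps
AMIGA_STYLE = capacity_kib(11)  # whole-track writes leave room for more sectors
```

These are raw figures; the space actually left for files is somewhat lower once filesystem overhead is subtracted.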

On the second front, Paula managed the Amiga’s serial port. But she didn’t have a buffer, which meant that communications programs had to be careful to get every byte at the exact instant it arrived, otherwise Paula would forget it. On the plus side, Paula could send and receive data as fast as it could be passed to her, meaning she could communicate at all standard bit rates and even some non-standard high-speed ones. But what Paula was really known for was her musical talents.

We’ll get into the evolution of computer music in another article, but what you need to know right now is that while we now live in a world where we take digital sound reproduction for granted, this wasn’t really the case in 1985. The compact disc had just been introduced and had achieved far from widespread adoption; virtually all other personal computers and videogame consoles made bleeps and bloops of varying complexity.

And so, Paula’s four channels of digital sound – two routing to the left audio output and two to the right – were a novelty. To make things even better, Paula could play digital samples (via Agnus) from memory, and playback was even prioritised!
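As a rough illustration of how four channels become a stereo signal, the Python sketch below sums one sample from each channel into a left and a right value. It assumes signed 8-bit samples, a per-channel volume from 0 to 64, and a routing of channels 0 and 3 to the left and 1 and 2 to the right; the routing constants and the name `mix_stereo` are assumptions for this example, and the real hardware combined the channels in its analogue output stage rather than in software.

```python
LEFT_CHANNELS = (0, 3)   # assumed routing: channels 0 and 3 to the left
RIGHT_CHANNELS = (1, 2)  # and channels 1 and 2 to the right


def mix_stereo(samples, volumes):
    """Mix four signed 8-bit samples (one per channel) into (left, right).

    volumes holds a level from 0 (silent) to 64 (full) for each channel.
    """
    def side(channels):
        # Scale each sample by its volume, sum, then normalise by full volume.
        return sum(samples[ch] * volumes[ch] for ch in channels) // 64

    return side(LEFT_CHANNELS), side(RIGHT_CHANNELS)
```

Trackers that offered more than four voices did essentially this kind of summing on the CPU before handing the result to the hardware channels.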

This allowed for the use of ‘lifelike’ digital samples in games while requiring little effort from the CPU. But where Paula’s abilities really came into play on the creative front was in the field of music, most notably through the software program known as Ultimate Soundtracker. Inspired by the Fairlight CMI sampling workstation’s method of sequenced sample playback, Ultimate Soundtracker provided a straightforward way for composers (budding or professional) to harness Paula’s four audio channels, and allowed the playback of realistic-sounding music. Later ‘trackers’ used software mixing to add more channels, but the Soundtracker ‘MODule’ format would reign supreme for some time.

Together, Agnus, Denise and Paula made one formidable team! However, that team was not cheap, and at US$1295 for just the computer when it went on sale in August 1985, all many budding digital creatives could do was drool. And those who could afford it would have to wait – by October only 50 had actually been built, all of them testing or demonstration machines, and consumers wouldn’t actually get their hands on them until mid-November, by which time many potential Amiga owners had moved on and purchased another 16-bit computer, such as an Atari ST.

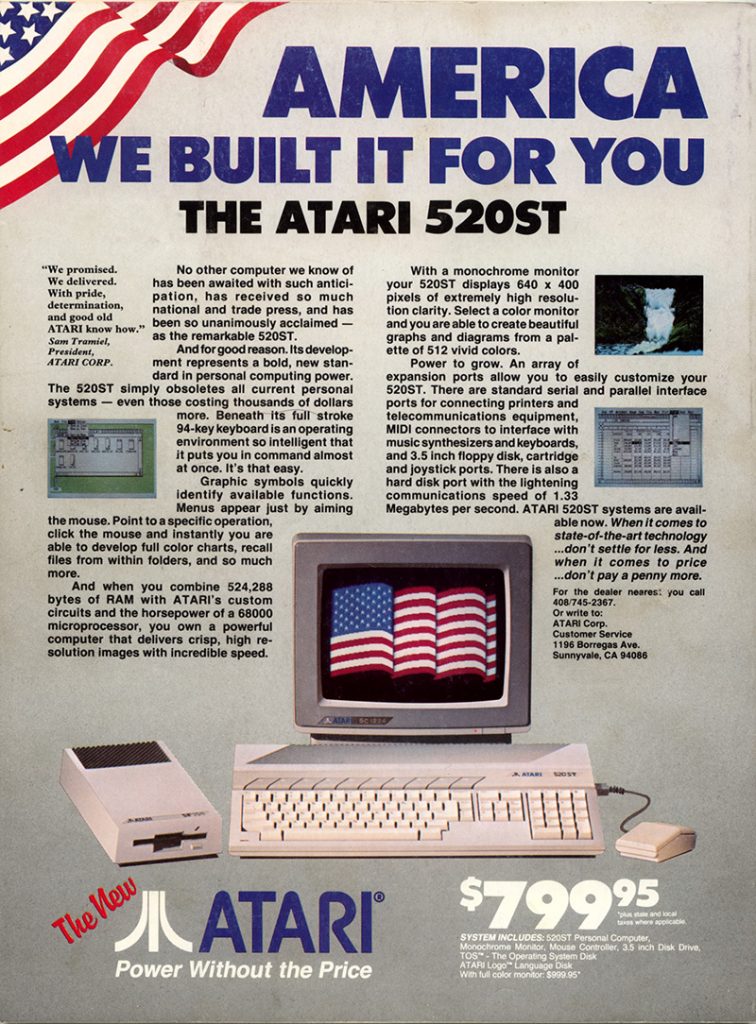

Not to be outdone, Jack Tramiel’s Atari Corp. launched its ST line of computers first, a few months ahead of the Amiga. While technically inferior in a number of ways, the “Jackintosh” was much cheaper than the Amiga 1000, and featured hardware more similar to the arcade machines of the day, which made it attractive to casual computer users and gamers.

Like the Amiga and the Macintosh, the ST had a mouse and a graphical user interface, known as GEM, although it was incapable of multitasking. It had a palette of only 512 colours (in comparison to the Amiga’s 4096), of which only 16 could be displayed on screen at once in the lowest resolution (320×200) and only 4 at medium resolution (640×200). Using a proprietary Atari monochrome monitor, ST users could also use a high-resolution 640×400 video mode, which was primarily used by word processing, desktop publishing and music applications, the last of which leveraged the ST’s built-in MIDI ports.

Derided as a videogame machine by Commodore, the ST found success amongst lower-income households, selling 50,000 units in the first four months of sales. It did particularly well in Europe, where exchange rates, taxes and profiteering made items like home computers much more expensive than elsewhere, and as such made the ST the only computer (and yes, videogame machine) many could afford.

The ST also had DOS and Macintosh emulators available for it, which made it attractive as a cheaper alternative for small businesses. Apple also attempted to jump into the 16-bit home computer fray with its IIGS system. Backward compatible with the 8-bit Apple IIe it had the advantage of a lot of available software, but it was really late to the party, coming out in September 1986.

Due in part to competition from the ST, by the end of 1985 Commodore had only sold 35,000 Amigas, causing it to run into cashflow problems and forcing it to miss the Consumer Electronics Show in January 1986. To make matters worse, early models suffered from bugs, and the first cuts hit the marketing department, meaning the only thing driving sales was word-of-mouth, and that word was not always good.

Commodore’s management realised the Amiga 1000 had a critical problem: despite its advanced chipset, the machine built around it didn’t have sufficient specifications to appeal to the high-end creative market, while it was also too expensive for the average home user to casually purchase. And so they split the Amiga into two: the Amiga 500 and 2000.

The Amiga 500 was given a form-factor similar to the Atari ST, where the computer was built into the keyboard, and a 3.5-inch disk drive was placed into the side of the machine. It shipped with 512KB of memory, double the 256KB of the base Amiga 1000. Most importantly, the price of the 500 was US$699 – cut almost in half in comparison to the 1000, and making the 500 much more attractive to home users.

The 2000 meanwhile had internal expansion slots and space for a hard disk, a must-have for corporate users. This combination was a commercial success, and successive models of Amiga would follow a similar pattern of high-end and low-end variants. Ultimately, Commodore would reportedly sell around 5 million Amiga models, mostly in Europe and the UK.

But unfortunately, despite his success in turning the Amiga around, Commodore CEO Thomas Rattigan was forced out by majority shareholder Irving Gould (who had chased away Commodore founder Jack Tramiel years earlier). Development on the Amiga largely ceased, and its models were quickly overtaken in the market by technically superior competitors, such as Intel 386 and 486-based machines with VGA video hardware.

After a failed last-ditch effort to save itself by launching an Amiga-based videogame console, the CD32, Commodore filed for bankruptcy in 1994. Its assets were purchased by German company Escom, which released a new model of Amiga with a better central processing unit, but it wasn’t enough, as Escom too went bankrupt in 1997. The Amiga’s time in the personal computer limelight was over.

There is some disagreement over just how many units of the Amiga and the ST were sold. The rivalry between owners of the two has continued into the present day, with each side presenting figures that paint the other’s sales unfavourably.

We can attempt to derive sales from the number of games available for each platform, but with estimates placing both at around 2000 that’s not very helpful, although the similar numbers could be because it was worthwhile for software companies to “port” games from one platform to the other regardless of game sales made on either.

All we can say for sure is that the ST and Amiga were fierce competitors, but while the ST was cheaper, the Amiga was certainly prettier.
