In the early days of computing, all computers were in effect personal computers – only one person could use them at a time! In the beginning, it was like using a calculator: you entered in an instruction, the computer gave a response, you entered in another instruction, and so on. Tedious and time-consuming.

Later on, mechanical systems were developed to automate the entry of instructions using stacks of punch cards. Programmers would punch holes that corresponded to their required computer instructions in these cards, and then the computer would execute them when the cards were eventually read, often in the middle of the night! The computer could still only do one thing at a time, but at least people didn’t have to physically wait in line.

However, while this was a practical solution for programs whose output wasn’t time-sensitive, aerospace applications in particular highlighted a need not only for real-time processing, but also for multiple programs to run at the same time and even exchange data with each other while doing so. This would require a computer to divide its time amongst these programs, keeping track of where it was in each, executing an instruction and moving on: a process known as “time-sharing”.
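The idea of dividing time amongst programs can be sketched in a few lines of Python. This is only an illustration, not how any System/360 operating system was written: the function name run_time_shared and the use of plain strings as "instructions" are assumptions made for the example.

```python
from collections import deque


def run_time_shared(programs):
    """Run each program one instruction at a time, round-robin.

    `programs` maps a program name to its list of instructions
    (plain strings in this sketch). Returns the execution order.
    """
    # Each queue entry remembers where the computer is within a program.
    ready = deque((name, 0) for name in programs)
    trace = []
    while ready:
        name, position = ready.popleft()
        instructions = programs[name]
        if position < len(instructions):
            trace.append((name, instructions[position]))  # "execute" one instruction
            ready.append((name, position + 1))            # save our place and move on
    return trace
```

Given two programs, the trace alternates between them until the shorter one finishes, after which the longer one runs alone.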

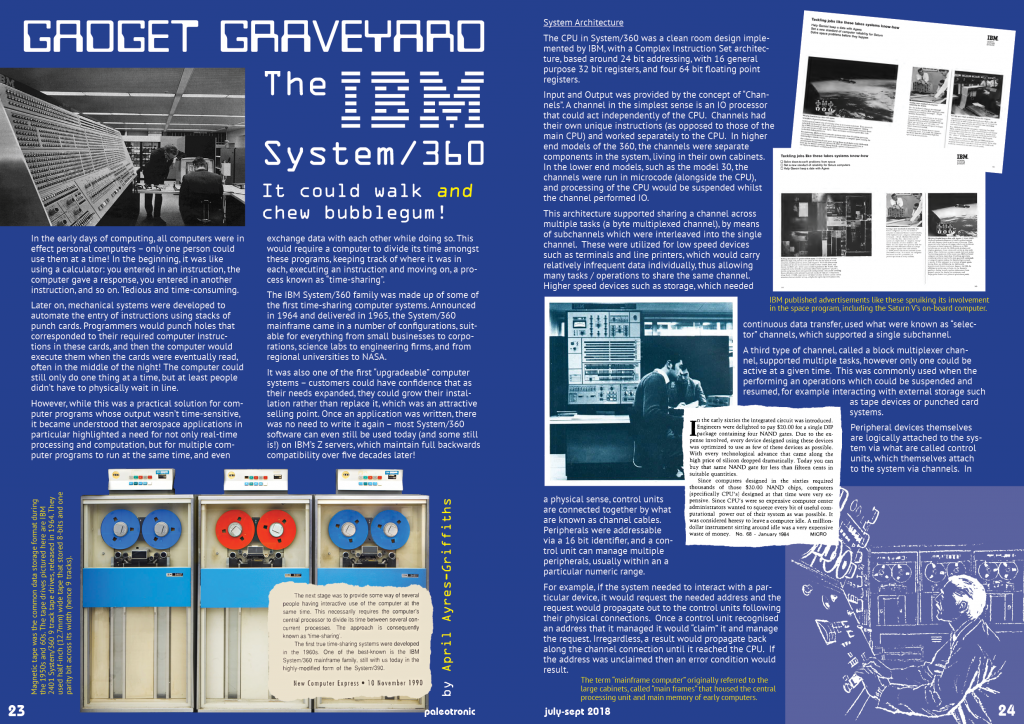

The IBM System/360 family was made up of some of the first time-sharing computer systems. Announced in 1964 and delivered in 1965, the System/360 mainframe came in a number of configurations, suitable for everything from small businesses to corporations, science labs to engineering firms, and from regional universities to NASA.

It was also one of the first “upgradeable” computer systems – customers could have confidence that as their needs expanded, they could grow their installation rather than replace it, which was an attractive selling point. Once an application was written, there was no need to write it again – most System/360 software can even still be used today (and some still is!) on IBM’s Z servers, which maintain full backwards compatibility over five decades later!

System Architecture

The CPU in System/360 was a clean-room design by IBM with a complex instruction set architecture, built around 24-bit addressing, 16 general-purpose 32-bit registers, and four 64-bit floating-point registers.
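To put the 24-bit figure in perspective, the short Python sketch below works out how much memory such an address can reach and shows an address being taken from the low-order 24 bits of a 32-bit register value. The names ADDRESS_MASK and effective_address are assumptions made for this example.

```python
ADDRESS_BITS = 24
ADDRESS_MASK = (1 << ADDRESS_BITS) - 1    # 0x00FFFFFF


def effective_address(register_value):
    """Keep only the low-order 24 bits of a 32-bit register value."""
    return register_value & ADDRESS_MASK


# 2**24 distinct byte addresses: 16,777,216 bytes, or 16 MiB.
addressable_bytes = 1 << ADDRESS_BITS
```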

Input and output were provided through the concept of “channels”. A channel in the simplest sense is an IO processor that could act independently of the CPU, with its own unique instructions (as opposed to those of the main CPU). In higher end models of the 360, the channels were separate components in the system, living in their own cabinets. In the lower end models, such as the model 30, the channels were run in microcode (alongside the CPU), and processing of the CPU would be suspended whilst the channel performed IO.

This architecture supported sharing a channel across multiple tasks (a byte multiplexed channel), by means of subchannels which were interleaved into the single channel. These were utilized for low speed devices such as terminals and line printers, which would carry relatively infrequent data individually, thus allowing many tasks / operations to share the same channel. Higher speed devices such as storage, which needed continuous data transfer, used what were known as “selector” channels, which supported a single subchannel.
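The interleaving performed by a byte multiplexed channel can be pictured with a small Python sketch. It is a simplification under stated assumptions: real channels were driven by device timing rather than a strict rotation, and the function name byte_multiplex and the subchannel names are made up for the example.

```python
from itertools import zip_longest


def byte_multiplex(subchannels):
    """Interleave bytes from several slow devices onto one channel.

    `subchannels` maps a subchannel name to the bytes it has waiting.
    Yields (subchannel, byte) pairs, taking one byte per device in turn.
    """
    names = list(subchannels)
    streams = [subchannels[name] for name in names]
    for round_of_bytes in zip_longest(*streams):
        for name, byte in zip(names, round_of_bytes):
            if byte is not None:    # None means this device has nothing left
                yield name, byte
```

A selector channel, by contrast, would correspond to draining one stream completely before touching the next.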

A third type of channel, called a block multiplexer channel, supported multiple tasks, but only one could be active at a given time. This was commonly used when performing operations which could be suspended and resumed, for example interacting with external storage such as tape devices or punched card systems.

Peripheral devices themselves were logically attached to the system via what are called control units, which in turn attached to the system via channels. In a physical sense, control units were connected together by what are known as channel cables. Peripherals were addressable via a 16-bit identifier, and a control unit could manage multiple peripherals, usually within a particular numeric range.

For example, if the system needed to interact with a particular device, it would request the needed address and the request would propagate out to the control units following their physical connections. Once a control unit recognised an address that it managed it would “claim” it and manage the request. Either way, a result would propagate back along the channel connection until it reached the CPU. If the address was unclaimed then an error condition would result.
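The claiming behaviour can be modelled roughly as follows. This Python sketch is illustrative only: the ControlUnit class, the route_request function and the address ranges are all hypothetical, and the real signalling on the channel cables was considerably more involved.

```python
class ControlUnit:
    """A control unit that manages a contiguous range of device addresses."""

    def __init__(self, name, first_address, last_address):
        self.name = name
        self.addresses = range(first_address, last_address + 1)

    def claims(self, address):
        return address in self.addresses


def route_request(control_units, address):
    """Pass a device address along the chain until a control unit claims it."""
    for unit in control_units:    # list order stands in for the physical cabling
        if unit.claims(address):
            return f"{unit.name} handled device {address:#06x}"
    return f"error: no control unit claimed {address:#06x}"
```

With a chain of a tape unit at 0x180 to 0x187 and a disk unit at 0x190 to 0x197, a request for 0x191 passes the tape unit and is claimed by the disk unit, while a request for 0x200 falls off the end of the chain and produces the error result.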

A key plank of the architecture was the standardization of the way that components of the system interconnected via the control units and channels. This meant IBM was able to release a plethora of devices for System/360 which could be used by all systems, and made extending the capabilities of an existing system as easy as connecting additional needed components.

Many standard peripherals of the time were supported, of course, such as magnetic tape, paper tape and punched card readers and writers (such as the IBM 2540, which could read 1000 punch cards per minute). Data communications devices were also supported, including teletype devices.

CRT displays were available for the system, including the IBM 2250 and 2260 models. The IBM 2250, released in conjunction with System/360, was a vector graphics CRT terminal able to render an arbitrary display list of vectors. It supported a light pen and was quite revolutionary, carrying a built-in vector-based character set to make it easier to display textual information to users. However, at $280,000 USD in 1965, it was well out of the price range of many. The IBM 2260 was a cheaper alternative: a monochrome, electro-mechanical video display terminal which also included a keyboard and could operate at 2400 baud.

Direct access storage was supported as well, such as the IBM 2311 Disk Storage Drive, which could store 7.25 megabytes of data on a removable six-platter magnetic disk pack. An IBM 2321 Data Cell could store up to 400 megabytes of data with an access time between 100 and 600 milliseconds. It contained ten data cells, each of which could be swapped in or out and held 200 strips of magnetic tape which could be accessed in a random access fashion. With the maximum of 8 devices connected, the system could provide roughly 3 gigabytes of storage (8 × 400 megabytes). Memory (RAM) storage devices (such as an IBM 2361) could also be connected to the system to further extend system memory.

IBM’s goal was to provide a flexible system that could grow with an organisation’s needs, allowing it to match as many price points as possible. In this respect, it could be said that they succeeded.

Time Sharing

Time sharing, or sharing the processing resources between multiple interactive users, was a concept gaining interest in the mid 1960s, as interactive processing often featured significant periods of idle time. Working with the University of Michigan, IBM developed a modified version of the System/360 model 65 (the model 65M), which added virtual memory and address translation support via a component called a DAT (Dynamic Address Translation) box. This had the benefit of allowing multiple programs to effectively run in their own isolated virtual address spaces.
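As a rough picture of what address translation achieves, the Python sketch below gives each program its own table mapping virtual pages to physical page frames. It is a simplified single-level model, not a description of the actual DAT box: the 4096-byte page size, the AddressSpace class and the example mappings are assumptions made for illustration.

```python
PAGE_SIZE = 4096    # assumed page size for this sketch


class AddressSpace:
    """One program's private mapping from virtual pages to physical frames."""

    def __init__(self, page_table):
        self.page_table = page_table    # {virtual page number: physical frame number}

    def translate(self, virtual_address):
        page, offset = divmod(virtual_address, PAGE_SIZE)
        if page not in self.page_table:
            raise LookupError(f"page fault at virtual address {virtual_address:#x}")
        return self.page_table[page] * PAGE_SIZE + offset


# Two programs use the same virtual address but land in different physical memory.
program_a = AddressSpace({1: 7})
program_b = AddressSpace({1: 3})
```

Here program_a.translate(0x1010) gives 0x7010 while program_b.translate(0x1010) gives 0x3010, which is the isolation described above: neither program can name the other's memory.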

The system was initially intended to be one of a kind, but IBM soon recognised that time sharing could be lucrative, and announced the model 67, which built on and commercialised the work done with the University of Michigan. It offered a special operating system, TSS/360 (Time Sharing System), that supported multiple concurrent users. To each user, it was as if they had access to their very own System/360. Eventually, IBM cancelled the TSS project, but not before its concepts had formed the basis of a multitude of other time sharing systems.

Languages

A number of languages were available over the years for the venerable System/360.

The first, of course, was Basic Assembly Language (BAL), which allowed the systems programmer to work the closest to the machine. Mnemonics were used to represent the wealth of CPU operations and the assembler would translate these to object code. The assembler was actually quite powerful, supporting relative addressing, named constants and storage, and labels. It even supported programmer comments, although of course these were stripped out of the final object code. In a lot of ways, it would set the standard for assemblers for future generations of systems, including for example the 6502.
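To give a flavour of the translation from mnemonics to object code, here is a toy Python sketch that handles only three register-to-register instructions. It is nowhere near a real assembler (no labels, storage operands or macros), the function name assemble_rr is an assumption for the example, and the opcode values are quoted from memory of the System/360 instruction set, so they should be checked against the Principles of Operation manual before being relied on.

```python
# Opcodes for three register-to-register (RR format) instructions.
# Values as recalled from the System/360 instruction set; verify before relying on them.
OPCODES = {"LR": 0x18, "AR": 0x1A, "SR": 0x1B}


def assemble_rr(line):
    """Translate a line such as 'AR 3,4' into two bytes of object code.

    Anything after the operands is treated as a comment and dropped.
    """
    mnemonic, operands, *_comment = line.split(None, 2)
    r1, r2 = (int(r) for r in operands.split(","))
    if not (0 <= r1 <= 15 and 0 <= r2 <= 15):
        raise ValueError("register numbers must be 0-15")
    # One opcode byte, then the two register numbers packed into one byte.
    return bytes([OPCODES[mnemonic], (r1 << 4) | r2])
```

For instance, assemble_rr("LR 5,6 copy register 6 into 5") produces the same two bytes as assemble_rr("LR 5,6"), showing the comment being discarded.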

Fortran IV was also available for the System/360 as a language that was a little easier to work with than the lower level assembly language. It was less machine specific than previous versions of the language, aiming for standards compliance. The name itself was derived from the words “Formula Translation”, and its main applications were in data processing and scientific computing. It was developed by IBM in the 1950s and made its way to System/360 as a matter of course. Fortran is still employed to this day for many scientific and mathematical applications, and went on to spawn more commonly recognised languages, one of which was BASIC.

Around 1965, IBM started work on APL/360, bringing the APL language to the System/360 platform. APL was very powerful for data processing, as it used arrays of data as its main data type rather than individual values. Each operation had its own graphic symbol, which made for incredibly concise code. For example, all prime numbers between 1 and R (an arbitrary value) could be computed with the following program:
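A widely quoted APL one-liner for this task is (~R∊R∘.×R)/R←1↓⍳R. As a rough illustration of the same array-at-a-time approach for readers without an APL interpreter, here is a Python sketch that follows it step by step. The function name primes_up_to is an assumption for this example and has nothing to do with APL/360 itself.

```python
def primes_up_to(r):
    """Mirror the APL expression (~R∊R∘.×R)/R←1↓⍳R step by step."""
    # R←1↓⍳R : the integers 1..r with the leading 1 dropped, i.e. 2..r
    candidates = list(range(2, r + 1))
    # R∘.×R : the outer product, every candidate multiplied by every candidate
    products = {a * b for a in candidates for b in candidates}
    # (~R∊...)/R : keep only the candidates that never appear as a product
    return [n for n in candidates if n not in products]
```

Like the APL original, this trades efficiency for brevity: it builds every pairwise product before filtering, so the work grows with the square of R.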

Early versions of APL/360 on the platform supported the concept of multiple concurrent users, making them in a sense their own time-sharing systems without the need for DAT hardware, as the abstractions could be handled by the APL environment itself.
