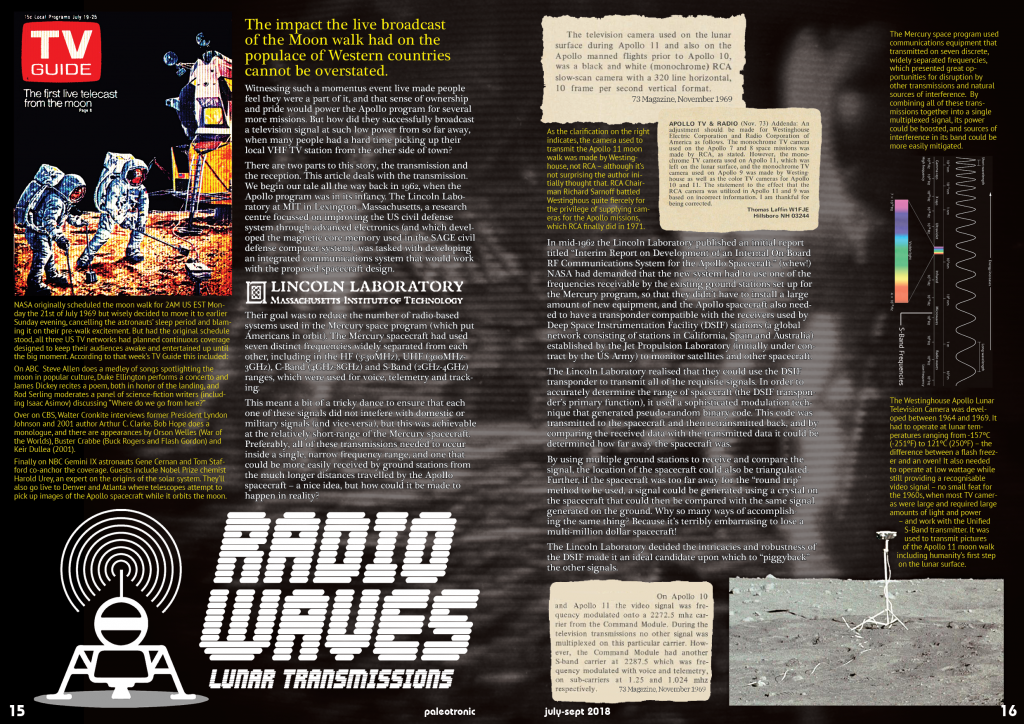

The impact the live broadcast of the Moon walk had on the populace of Western countries cannot be overstated.

On ABC Steve Allen does a medley of songs spotlighting the moon in popular culture, Duke Ellington performs a concerto and James Dickey recites a poem, both in honor of the landing, and Rod Serling moderates a panel of science-fiction writers (including Isaac Asimov) discussing “Where do we go from here?”

Over on CBS, Walter Cronkite interviews former President Lyndon Johnson and 2001 author Arthur C. Clarke. Bob Hope does a monologue, and there are appearances by Orson Welles (War of the Worlds), Buster Crabbe (Buck Rogers and Flash Gordon) and Keir Dullea (2001).

Finally, on NBC, Gemini IX astronauts Gene Cernan and Tom Stafford co-anchor the coverage. Guests include Nobel Prize chemist Harold Urey, an expert on the origins of the solar system. They’ll also go live to Denver and Atlanta, where telescopes attempt to pick up images of the Apollo spacecraft while it orbits the moon.

Witnessing such a momentous event live made people feel they were a part of it, and that sense of ownership and pride would power the Apollo program for several more missions. But how did they successfully broadcast a television signal at such low power from so far away, when many people had a hard time picking up their local VHF TV station from the other side of town?

There are two parts to this story, the transmission and the reception. This article deals with the transmission.

We begin our tale all the way back in 1962, when the Apollo program was in its infancy. The Lincoln Laboratory at MIT in Lexington, Massachusetts, a research centre focussed on improving the US air defence system through advanced electronics (and which developed the magnetic core memory used in the SAGE air defence computer system), was tasked with developing an integrated communications system that would work with the proposed spacecraft design.

Their goal was to reduce the number of radio-based systems used in the Mercury space program (which put Americans in orbit). The Mercury spacecraft had used seven distinct frequencies widely separated from each other, including in the HF (3-30MHz), UHF (300MHz-3GHz), C-Band (4GHz-8GHz) and S-Band (2GHz-4GHz) ranges, which were used for voice, telemetry and tracking.

This meant a bit of a tricky dance to ensure that each one of these signals did not interfere with domestic or military signals (and vice-versa), but this was achievable at the relatively short range of the Mercury spacecraft. Preferably, all of these transmissions needed to occur inside a single, narrow frequency range, and one that could be more easily received by ground stations from the much longer distances travelled by the Apollo spacecraft. A nice idea, but how could it be made to happen in reality?

In mid-1962 the Lincoln Laboratory published an initial report titled “Interim Report on Development of an Internal On-Board RF Communications System for the Apollo Spacecraft” (whew!). NASA had demanded that the new system use one of the frequencies receivable by the existing ground stations set up for the Mercury program, so that they didn’t have to install a large amount of new equipment. The Apollo spacecraft also needed a transponder compatible with the receivers used by the Deep Space Instrumentation Facility (DSIF), a global network of stations in California, Spain and Australia established by the Jet Propulsion Laboratory (initially under contract to the US Army) to monitor satellites and other spacecraft.

The Lincoln Laboratory realised that they could use the DSIF transponder to transmit all of the requisite signals. In order to accurately determine the range of a spacecraft (the DSIF transponder’s primary function), it used a sophisticated modulation technique that generated a pseudo-random binary code. This code was transmitted to the spacecraft and then retransmitted back, and by comparing the received code with the transmitted code, and measuring how far the two had slipped out of step, it could be determined how far away the spacecraft was.
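To make the “compare what came back with what was sent” idea concrete, here is a minimal sketch in Python. It is not the DSIF implementation: the code length, the chip rate and the function names are all assumptions chosen for illustration. It generates a pseudo-random sequence, delays it to simulate the round trip, then slides the two copies against each other until they line up.

```python
import random

C = 299_792_458.0  # speed of light in metres per second


def pn_sequence(length, seed=1):
    """Pseudo-random sequence of +1/-1 'chips' (illustrative, not the real code)."""
    rng = random.Random(seed)
    return [rng.choice((-1, 1)) for _ in range(length)]


def estimate_delay(sent, received):
    """Return the circular shift (in chips) at which the two copies match best."""
    n = len(sent)
    best_shift, best_score = 0, float("-inf")
    for shift in range(n):
        # Correlation: a large positive sum means the chips agree at this shift.
        score = sum(sent[i] * received[(i + shift) % n] for i in range(n))
        if score > best_score:
            best_shift, best_score = shift, score
    return best_shift


def range_from_delay(delay_chips, chip_rate_hz):
    """Convert a round-trip delay in chips to a one-way distance in metres."""
    round_trip_seconds = delay_chips / chip_rate_hz
    return C * round_trip_seconds / 2


code = pn_sequence(511)
true_delay = 137  # simulated round-trip delay, in chips
echo = [code[(i - true_delay) % len(code)] for i in range(len(code))]

measured = estimate_delay(code, echo)
# chip_rate_hz is an assumed value, picked only to give round numbers.
distance_m = range_from_delay(measured, chip_rate_hz=1_000_000)
```

A short repeating code like this one can only resolve the delay up to its own length, so the measured range repeats once the code wraps around; a practical ranging code has to be long enough to cover the whole round trip.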

By using multiple ground stations to receive and compare the signal, the location of the spacecraft could also be triangulated. Further, if the spacecraft was too far away for the “round trip” method to be used, a signal could be generated using a crystal on the spacecraft that could then be compared with the same signal generated on the ground.

Why so many ways of accomplishing the same thing? Because it’s terribly embarrassing to lose a multi-million dollar spacecraft!

The Lincoln Laboratory decided the intricacies and robustness of the DSIF made it an ideal candidate upon which to “piggyback” the other signals.

In early 1963 the Lincoln Laboratory demonstrated the “Unified Carrier” concept to NASA. The system used phase modulation (PM). Phase modulation is closely related to frequency modulation (FM), in that both encode a signal by altering the timing of the carrier wave rather than its strength. But where FM shifts the carrier’s frequency in proportion to the signal being modulated, PM works at a more granular level, nudging the “phase” of the carrier (roughly meaning the signal’s position on the curve of each individual cycle at a given moment) forwards or backwards in proportion to the signal being modulated.

This substantially increases the “bandwidth” available to encode on the carrier and allows for a wide variety of information to be modulated on a single carrier (usually on sub-carriers, some of which may have their own sub-carriers and… let’s back out of that rabbit hole before we get lost!). Suffice it to say, due to its ability to convey large amounts of data, phase modulation is used for all sorts of modern radio-based technologies such as Wi-Fi and GSM.
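For readers who prefer code to curves, the difference between PM and FM can be sketched in a few lines of Python. This is a textbook illustration, not the Unified S-Band modulator: the function names, the 100Hz carrier, the 5Hz test tone and the `k_p` and `k_f` sensitivity values are all assumptions picked to keep the numbers small.

```python
import math


def phase_modulate(message, carrier_hz, sample_rate_hz, k_p):
    """PM: the carrier's phase is offset in direct proportion to the message."""
    samples = []
    for n, m in enumerate(message):
        t = n / sample_rate_hz
        samples.append(math.cos(2 * math.pi * carrier_hz * t + k_p * m))
    return samples


def frequency_modulate(message, carrier_hz, sample_rate_hz, k_f):
    """FM: the carrier's frequency is offset in proportion to the message,
    so the phase becomes the running sum of everything sent so far."""
    samples, phase = [], 0.0
    for m in message:
        samples.append(math.cos(phase))
        phase += 2 * math.pi * (carrier_hz + k_f * m) / sample_rate_hz
    return samples


sample_rate = 1000  # samples per second (assumed)
tone = [math.sin(2 * math.pi * 5 * n / sample_rate) for n in range(sample_rate)]

pm = phase_modulate(tone, carrier_hz=100, sample_rate_hz=sample_rate, k_p=0.5)
fm = frequency_modulate(tone, carrier_hz=100, sample_rate_hz=sample_rate, k_f=20)
```

With a silent message both functions produce the same plain carrier; they only part ways once there is something to send. Keeping `k_p` small corresponds to the gentle kind of phase modulation that leaves most of the power in the carrier itself.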

However, in the case of the Unified S-Band system, to ensure the carrier signal could also be reliably used for precise distance tracking, the phase modulation was subtle enough that the signal could be mistaken for double-sideband amplitude modulation (AM), providing a certain amount of “security through obscurity”.

NASA was impressed by the demonstration and the technical qualities of the proposed system, and went ahead with its continued development. In practice, there were two signals, an “uplink” (using frequencies within the band from 2025-2120MHz) to the Apollo spacecraft, and a “downlink” (using frequencies from 2200-2290MHz) back to Earth.

The use of two separate frequency bands allowed for “full-duplex” operation: both the ground and the spacecraft could broadcast continuously, and consequently voice transmissions could not “walk over” each other.

Uplink voice and data transmissions were modulated on sub-carriers, at 30kHz and 70kHz respectively.

The downlink, on the other hand, had sub-carriers at 1.25MHz (voice) and 1.024MHz (telemetry data). The higher frequencies had larger bandwidth capabilities for the increased volume of downloaded data from the spacecraft. However, this still wasn’t enough for the television signal, at least not at the resolution and framerate that NASA wanted.

While video had been included as a slice of the original phase-modulated S-Band specification, NASA was concerned that the choppy, blocky image would be uninspiring to the American home audience, and felt that taxpayers might think they hadn’t gotten value for money.

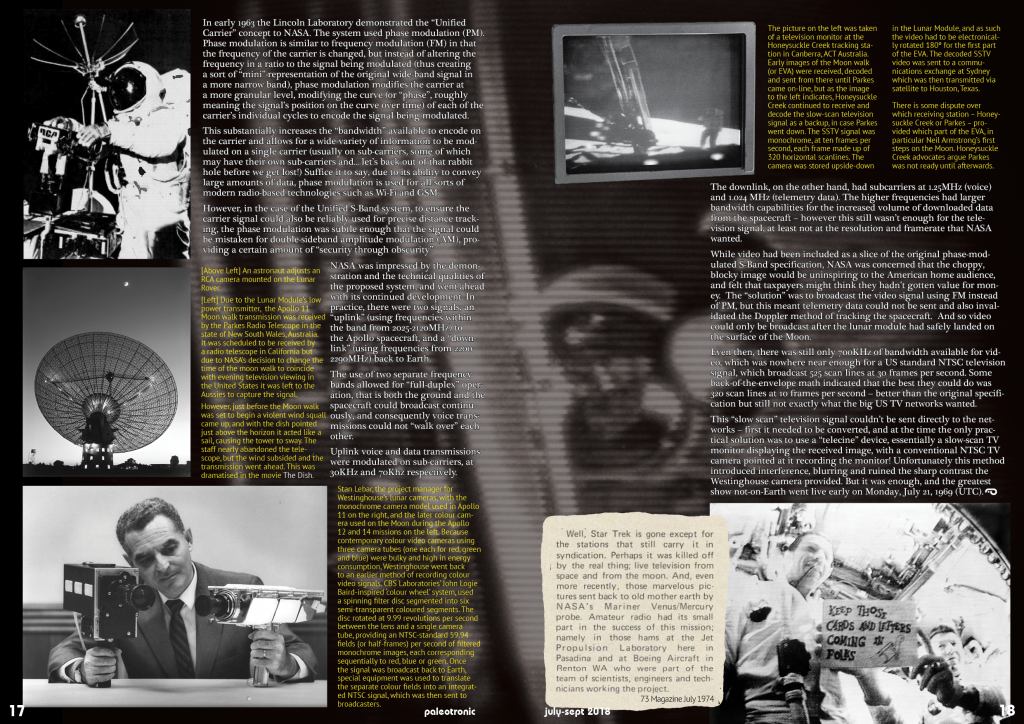

There is some dispute over which receiving station – Honeysuckle Creek or Parkes – provided which part of the EVA, in particular Neil Armstrong’s first steps on the Moon. Honeysuckle Creek advocates argue Parkes was not ready until afterwards.

The “solution” was to broadcast the video signal using FM instead of PM, but this meant telemetry data could not be sent and also invalidated the Doppler method of tracking the spacecraft. And so video could only be broadcast after the lunar module had safely landed on the surface of the Moon.

Even then, there was still only 700kHz of bandwidth available for video, which was nowhere near enough for a US standard NTSC television signal, which broadcast 525 scan lines at 30 frames per second. Some back-of-the-envelope math indicated that the best they could do was 320 scan lines at 10 frames per second, which was better than the original specification but still not exactly what the big US TV networks wanted.
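A version of that back-of-the-envelope math might look like the following. This is a rough sketch and not NASA’s actual calculation: it assumes a 4:3 picture with equal horizontal and vertical detail, and uses the rule of thumb that one cycle of signal can carry two picture elements. It ignores blanking intervals and other practical factors, so it overstates the real NTSC figure (broadcast NTSC video occupied about 4.2MHz).

```python
def lines_per_second(scan_lines, frames_per_second):
    """How many scan lines must be sent every second."""
    return scan_lines * frames_per_second


def rough_video_bandwidth_hz(scan_lines, frames_per_second, aspect_ratio=4 / 3):
    """Crude estimate: one cycle of bandwidth carries two picture elements.

    aspect_ratio is an assumption (4:3), used to guess how many picture
    elements sit along each scan line.
    """
    elements_per_line = scan_lines * aspect_ratio
    elements_per_second = elements_per_line * scan_lines * frames_per_second
    return elements_per_second / 2


ntsc_hz = rough_video_bandwidth_hz(525, 30)       # about 5.5MHz
slow_scan_hz = rough_video_bandwidth_hz(320, 10)  # about 0.68MHz

print(f"NTSC:      {lines_per_second(525, 30):>6} lines/s, ~{ntsc_hz / 1e6:.2f}MHz")
print(f"Slow scan: {lines_per_second(320, 10):>6} lines/s, ~{slow_scan_hz / 1e6:.2f}MHz")
```

Under these assumptions the slow-scan format needs roughly an eighth of the bandwidth of NTSC and squeezes in just under the 700kHz available, which is consistent with the figures above.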

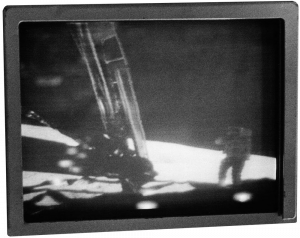

This “slow scan” television signal couldn’t be sent directly to the networks. First it needed to be converted, and at the time the only practical solution was to use a “telecine” device: essentially a slow-scan TV monitor displaying the received image, with a conventional NTSC TV camera pointed at it recording the monitor! Unfortunately this method introduced interference and blurring, and ruined the sharp contrast the Westinghouse camera provided. But it was enough, and the greatest show not-on-Earth went live early on Monday, July 21, 1969 (UTC).
